
How AI Tools Are Revolutionising Application Deployment

AI-powered application deployment tools are transforming how modern software reaches production, yet many DevOps teams in 2025 still rely on manual, traditional deployment methods.

These outdated approaches often lead to slow rollouts, misconfigurations, and higher downtime. Conventional application deployment tools lack the intelligence and adaptability needed to keep pace with increasingly complex, multi-cloud environments.

Manual processes and static scripts create bottlenecks that limit innovation and increase the risk of failures. Without real-time insights and automation, teams spend valuable time firefighting issues instead of proactively preventing them.

Why Do Application Deployment Tools Need AI in 2025?

The complexity of software deployment has skyrocketed in recent years. According to a 2024 Gartner report, over 62% of software teams experience at least one failed deployment per month, primarily due to configuration errors or delayed rollback actions. These failures cost time, frustrate developers, and interrupt user experiences.

Industry projections also suggest that over 75% of enterprises will adopt AI-powered DevOps tools by 2026 to accelerate software delivery and reduce operational risk.

Legacy tools weren’t built for this environment.

They were designed to automate tasks, not make decisions. That's the gap AI fills, and it's why AI in DevOps is becoming a foundational shift rather than just a feature upgrade.

How Are AI Tools Changing Application Deployment?

Modern applications run on microservices architectures across hybrid and multi-cloud environments, requiring dynamic scaling, rapid rollback, and near-zero downtime. Manual or rule-based deployment pipelines struggle to respond to the unpredictable nature of these environments, leading to increased failure rates and longer recovery times.

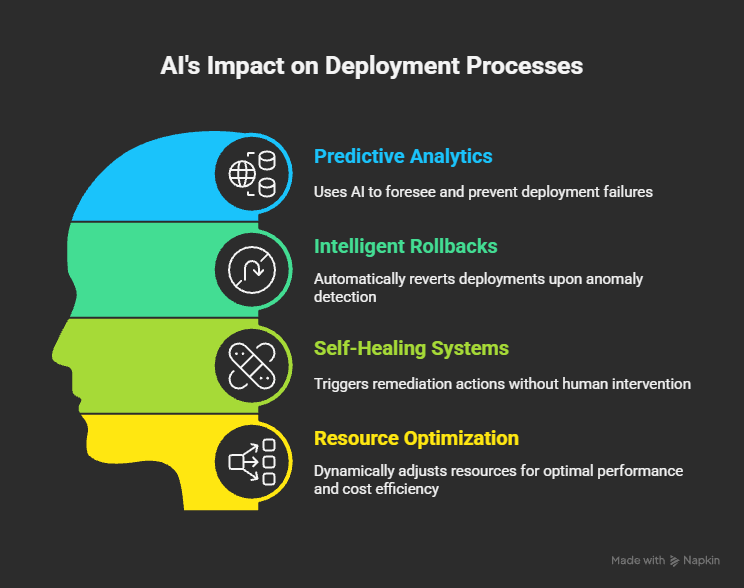

AI addresses these challenges by automating deployment decisions with predictive analytics, anomaly detection, and adaptive automation.

For example, a survey from Forrester found that organisations using AI-driven deployment tools experienced a 30% reduction in deployment failures and a 20% improvement in deployment speed.

Let’s break down the most impactful changes AI brings to the deployment process:

Predictive Analytics: Stopping Failures Before They Happen

One of the most powerful applications of AI in deployment is predictive analytics.

By continuously analysing logs, metrics, and historical deployment data, AI models can identify patterns that precede failures.

For instance, Netflix’s use of machine learning in their deployment pipelines allows them to predict service degradation and automatically halt problematic releases.

Instead of waiting for incidents to occur, application deployment automation powered by AI can halt a risky deploy, reroute traffic, or alert developers before customers are impacted.

Intelligent Rollbacks and Self-Healing Systems

Traditional CI/CD pipelines rely on pre-scripted rollback logic, which may or may not reflect real-world app behaviour. AI-powered CI/CD systems, on the other hand, continuously observe your app’s health post-deployment and can initiate intelligent rollbacks automatically if anomalies are detected.

Example: Spotify implemented an AI-powered rollback system that automatically reverts releases when anomaly scores cross predefined thresholds, reducing Mean Time to Recovery (MTTR) by over 40%.
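A threshold-based rollback of this kind can be sketched with a simple anomaly score. This is not Spotify's implementation: the sketch assumes a z-score against pre-deployment samples as the anomaly score and a hypothetical threshold of 3.0, and it only flags values that are higher than normal (for example, latency or error counts).

```python
from statistics import mean, stdev


def anomaly_score(baseline: list[float], value: float) -> float:
    """z-score of a post-deploy value against the pre-deploy baseline (needs 2+ samples)."""
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        # Perfectly flat baseline: anything above it is maximally anomalous.
        return 0.0 if value <= mu else float("inf")
    return (value - mu) / sigma


def should_roll_back(baseline: list[float], post_deploy: list[float],
                     threshold: float = 3.0) -> bool:
    """One-sided check: only values above normal count as anomalies."""
    return any(anomaly_score(baseline, v) > threshold for v in post_deploy)


baseline_latency_ms = [100, 102, 98, 101, 99]
print(should_roll_back(baseline_latency_ms, [101, 100]))  # False
print(should_roll_back(baseline_latency_ms, [101, 130]))  # True
```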

Self-healing systems go a step further. If a service crashes or performs poorly after an update, AI can trigger remediation without human input: restarting the instance, reverting the change, or spinning up additional resources.
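The remediation options above can be expressed as a small decision function. This is a hypothetical, rule-based stand-in for what an AI layer would decide; the `Action` names and the thresholds (three failed health checks, 85% CPU) are assumptions for illustration.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    RESTART = "restart"
    SCALE_OUT = "scale_out"
    ROLLBACK = "rollback"


def choose_remediation(crashed: bool, cpu_util: float, failed_checks: int,
                       recently_deployed: bool) -> Action:
    """Hypothetical escalation rules; an AI layer would learn or tune these."""
    if recently_deployed and failed_checks >= 3:
        return Action.ROLLBACK   # trouble started with the release: revert it
    if crashed:
        return Action.RESTART    # isolated crash: restart the instance
    if cpu_util > 0.85:
        return Action.SCALE_OUT  # healthy but saturated: add capacity
    return Action.NONE


print(choose_remediation(crashed=True, cpu_util=0.40, failed_checks=4,
                         recently_deployed=True))  # Action.ROLLBACK
```

Checking for a recent release first reflects the idea that restarting a broken build only delays the inevitable revert.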

How AI Optimises Resource Allocation in Real-Time

Resource allocation during deployment is another critical area benefiting from AI. Amazon Web Services (AWS) uses AI models to predict load and dynamically adjust container resources, ensuring optimal performance and cost efficiency. AI continuously tunes CPU, memory, and bandwidth provisioning during and after deployment based on real-time demand.

A McKinsey study found that companies employing AI for resource optimisation during deployment reduced cloud infrastructure costs by up to 25% while improving application responsiveness.

This type of deployment intelligence is especially useful in multi-environment setups (dev, staging, prod), where over-provisioning is common.
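As an illustration of predicting load and provisioning ahead of it, the sketch below forecasts the next interval's requests per second with Holt's linear (double exponential) smoothing and converts the forecast into a replica count. It is not how AWS implements predictive scaling; the smoothing factors, 20% headroom, and two-replica floor are hypothetical defaults.

```python
import math


def holt_forecast(series: list[float], alpha: float = 0.5, beta: float = 0.3,
                  steps_ahead: int = 1) -> float:
    """Holt's linear smoothing: track a level and a trend, then extrapolate."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + steps_ahead * trend


def replicas_needed(rps_history: list[float], rps_per_replica: float,
                    headroom: float = 0.2, min_replicas: int = 2) -> int:
    """Provision for the forecast load plus headroom, never below a floor."""
    forecast = holt_forecast(rps_history)
    return max(min_replicas, math.ceil(forecast * (1 + headroom) / rps_per_replica))


# Traffic growing by 100 requests/second per interval; forecast is 500 rps.
print(replicas_needed([100, 200, 300, 400], rps_per_replica=110))  # 6
```

Scaling on the forecast rather than the current 400 rps is what lets capacity arrive before the load does; the floor keeps a quiet environment from dropping to a single point of failure.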

“AI-powered deployment tools transform the deployment pipeline from a risk-laden manual task into a predictive, automated, and self-correcting system, accelerating innovation and reducing downtime.” — Dr. Priya Natarajan, DevOps Researcher

What Are the Real-World Use Cases of AI in Application Deployment?

Teams across industries are already using AI tools for deployment to improve uptime, reduce manual work, and simplify complex rollouts.

Here’s how these tools are being used in production today:

From Manual Pipelines to AI-Enhanced CI/CD Workflows

Companies are moving away from hand-coded CI/CD pipelines toward AI-powered CI/CD systems that detect code changes, assess risk, and automate the rollout process end-to-end.

Key capabilities include:

Key capabilities include:

- Dynamic rollout control: AI adjusts the speed and scope of deployments based on live telemetry (e.g., latency spikes or error trends).

- Behavioural recommendations: Based on past deployment outcomes, AI suggests safer configurations or rollout strategies.

- Progressive delivery optimisation: Canary releases and blue/green deployments are fine-tuned on the fly using usage and performance data.
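Dynamic rollout control for a canary can be sketched as a function that picks the next traffic percentage from live telemetry. The sketch assumes two signals (error rate and p95 latency) compared with the stable version; the `Health` type, step size, and tolerances are hypothetical and would normally be tuned per service.

```python
from dataclasses import dataclass


@dataclass
class Health:
    error_rate: float      # fraction of failed requests, e.g. 0.01 = 1%
    p95_latency_ms: float


def next_canary_weight(current: int, canary: Health, baseline: Health,
                       step: int = 10, max_error_delta: float = 0.01,
                       max_latency_ratio: float = 1.2) -> int:
    """Return the next canary traffic percentage; 0 means abort and roll back."""
    degraded = (canary.error_rate - baseline.error_rate > max_error_delta
                or canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio)
    if degraded:
        return 0
    # Move faster when the canary is at least as reliable as the stable version.
    increment = step * 2 if canary.error_rate <= baseline.error_rate else step
    return min(100, current + increment)


stable = Health(error_rate=0.010, p95_latency_ms=200)
print(next_canary_weight(10, Health(0.005, 190), stable))  # 30
print(next_canary_weight(10, Health(0.015, 210), stable))  # 20
print(next_canary_weight(10, Health(0.050, 200), stable))  # 0
```

Returning 0 signals the pipeline to shift all traffic back to the stable version; doubling the step for a canary that matches or beats the baseline is one simple way to speed up healthy rollouts.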

Real-world impact: DevOps teams using Kuberns have seen up to a 35% reduction in release time by automating testing and rollout orchestration through AI.

How Are Enterprises Reducing Downtime with AI?

For larger systems, even a minute of downtime can cost thousands. That’s why AI in DevOps is being adopted aggressively in enterprise environments. AI can detect early warning signals like memory leaks, traffic anomalies, or dependency failures and trigger automatic actions before incidents affect users.

Examples of AI-driven recovery features:

- Self-healing rollouts: When a release triggers regressions, the system autonomously rolls back and restores the last known good state.

- Root cause diagnostics: Instead of raw logs, AI surfaces interpreted explanations for what caused a deployment failure.

- Predictive rollback points: Instead of reverting blindly, the system calculates the safest and most stable rollback option based on behaviour patterns.
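A predictive rollback point can be approximated by scoring past releases on their observed behaviour. In this hypothetical sketch, the `Release` fields and the thresholds (24 hours of soak time, at most 1% errors, no incidents) are assumptions, and the heuristic simply returns the newest release that meets all of them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Release:
    version: str
    soak_hours: float   # hours the release served production traffic
    error_rate: float   # error rate observed while it was live
    incidents: int      # incidents attributed to this release


def safest_rollback_target(history: list[Release], current_version: str,
                           min_soak_hours: float = 24.0,
                           max_error_rate: float = 0.01) -> Optional[Release]:
    """Return the newest release (history is oldest-first) with a clean track record."""
    for release in reversed(history):
        if release.version == current_version:
            continue
        if (release.soak_hours >= min_soak_hours
                and release.error_rate <= max_error_rate
                and release.incidents == 0):
            return release
    return None  # no safe candidate: escalate to a human


history = [
    Release("v1", soak_hours=72, error_rate=0.002, incidents=0),
    Release("v2", soak_hours=48, error_rate=0.020, incidents=1),
    Release("v3", soak_hours=2, error_rate=0.001, incidents=0),
]
print(safest_rollback_target(history, current_version="v3").version)  # v1
```

Here a blind "previous version" rollback would land on v2, which had its own incident; skipping to v1 is the difference the bullet above describes.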

How Is Kuberns Leading AI-Driven Application Deployment?

While most deployment platforms bolt AI on top of legacy systems, Kuberns was built from day one with an AI-first foundation. Its architecture is designed to remove the manual burden of DevOps, streamline CI/CD, and make real-time deployment decisions using intelligent automation.

While most deployment platforms bolt AI on top of legacy systems, Kuberns was built from day one with an AI-first foundation. Its architecture is designed to remove the manual burden of DevOps, streamline CI/CD, and make real-time deployment decisions using intelligent automation.

Here’s how it delivers on the promise of AI-powered application deployment:

Built for Speed, Stability, and Scalable Intelligence

Kuberns deploys your code directly from Git without requiring scripts, YAML files, or cloud configuration. Once your repo is connected, the platform:

- Detects your stack automatically

- Builds and containerises your app

- Deploys it across isolated environments

- Sets up HTTPS, logging, and autoscaling by default

The whole process requires zero configuration, so no DevOps engineer or platform team is needed to get started.

These capabilities not only improve the stability of production environments but also allow teams to scale deployments faster without increasing operational complexity.
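Automatic stack detection, the first step in the list above, is often done by looking for well-known manifest files in the repository root. The sketch below is a hypothetical illustration of that idea, not Kuberns's actual logic; the marker table and `detect_stack` are assumptions, and production detectors usually also inspect file contents and lockfiles.

```python
from pathlib import Path
from typing import Optional

# Hypothetical marker table, checked in order (first match wins).
STACK_MARKERS = [
    ("Dockerfile", "docker"),
    ("package.json", "node"),
    ("pyproject.toml", "python"),
    ("requirements.txt", "python"),
    ("go.mod", "go"),
    ("Cargo.toml", "rust"),
    ("pom.xml", "java"),
    ("Gemfile", "ruby"),
]


def detect_stack(repo_dir: str) -> Optional[str]:
    """Guess the runtime from well-known files in the repository root."""
    root = Path(repo_dir)
    for filename, stack in STACK_MARKERS:
        if (root / filename).is_file():
            return stack
    return None  # unknown: ask the user or fall back to a generic builder
```

Because the first match wins, a repository with its own `Dockerfile` is treated as a container build even if it also contains `package.json`; the ordering of the table is the design choice that resolves such ambiguities.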

Automating Rollouts, Healing Failures, and Optimising Environments

Kuberns is used by forward-thinking teams to solve specific deployment challenges with intelligent automation:

- Automated rollouts that adapt speed and scope depending on user traffic, load, or feedback loops.

- Self-healing deployments that detect regressions and automatically roll back or reconfigure the system.

- Environment optimisation that tunes resource allocations during rollouts to reduce overuse and cloud waste.

AI-First Architecture That Grows With You

The foundation of Kuberns is modular and extensible, allowing teams to integrate AI deployment logic into existing DevOps workflows with minimal friction. Key differentiators include:

- Pluggable AI policies that can be customised per service or environment

- Streaming telemetry engines for continuous learning and behaviour analysis

- Integration-ready APIs that support third-party observability and security platforms

With Kuberns, teams don’t just get smarter deployments, they get an ecosystem that learns, scales, and protects itself over time.
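Per-service, per-environment policies are a common plugin pattern. The sketch below is a hypothetical illustration, not the Kuberns API: the `RolloutPolicy` protocol, the two example policies, and the `PolicyRegistry` lookup order (exact service and environment, then a service-wide `"*"` entry, then a default) are all assumptions.

```python
from typing import Protocol


class RolloutPolicy(Protocol):
    """Hypothetical plugin interface: how far a rollout may advance per step."""
    def max_step_percent(self) -> int: ...


class Conservative:
    def max_step_percent(self) -> int:
        return 5


class Aggressive:
    def max_step_percent(self) -> int:
        return 25


class PolicyRegistry:
    def __init__(self, default: RolloutPolicy) -> None:
        self._default = default
        self._overrides: dict[tuple[str, str], RolloutPolicy] = {}

    def register(self, service: str, environment: str, policy: RolloutPolicy) -> None:
        """Use environment="*" to apply a policy to every environment of a service."""
        self._overrides[(service, environment)] = policy

    def resolve(self, service: str, environment: str) -> RolloutPolicy:
        # Most specific match wins: exact pair, then service-wide, then default.
        for key in ((service, environment), (service, "*")):
            if key in self._overrides:
                return self._overrides[key]
        return self._default


registry = PolicyRegistry(default=Aggressive())
registry.register("payments", "prod", Conservative())
print(registry.resolve("payments", "prod").max_step_percent())     # 5
print(registry.resolve("payments", "staging").max_step_percent())  # 25
```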

Up to 40% AWS Cloud Cost Savings Without Doing Anything

One of the biggest advantages of Kuberns is that it runs your application on optimised AWS infrastructure behind the scenes.

Its AI layer:

- Continuously tunes CPU/memory allocation

- Eliminates wasteful idle instances

- Ensures high availability without overprovisioning

Teams switching from traditional AWS setups (e.g., EC2 + EKS + manual autoscaling) report up to 40% lower cloud bills, with no changes to their app or infrastructure code.
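Continuous CPU tuning often comes down to rightsizing: setting a workload's request close to what it actually uses. This hypothetical sketch sizes the request to the nearest-rank 95th percentile of observed usage plus 15% headroom and reports the fraction of the current request that would be freed; it does not describe how Kuberns computes its allocations, and the numbers are illustrative.

```python
import math


def recommend_cpu_millicores(usage_samples: list[float], headroom: float = 0.15) -> int:
    """Nearest-rank 95th percentile of observed usage, plus headroom, rounded up."""
    if not usage_samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_samples)
    rank = math.ceil(len(ordered) * 95 / 100)  # 1-based nearest rank
    p95 = ordered[max(rank, 1) - 1]
    return math.ceil(p95 * (1 + headroom))


def freed_fraction(current_request: int, recommended: int) -> float:
    """Share of the current request that rightsizing would release (0 if none)."""
    return max(0.0, (current_request - recommended) / current_request)


# 19 quiet intervals at 100m CPU and one 900m spike, against a 500m request.
samples = [100.0] * 19 + [900.0]
rec = recommend_cpu_millicores(samples)
print(rec, round(freed_fraction(500, rec), 2))  # 115 0.77
```

Sizing to p95 rather than the peak deliberately tolerates brief spikes (here the 900m sample), which is where the savings come from, and is also why it is usually paired with limits or autoscaling that can absorb short bursts.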

Getting Started with AI Tools for Deployment: What’s Next?

Adopting AI tools for application deployment doesn’t have to be disruptive; it can begin with small, high-impact integrations into your existing CI/CD workflows.

Whether you’re a startup running on a single cloud or an enterprise operating across a hybrid infrastructure, AI can start delivering value quickly with minimal risk.

Tips for Migrating from Legacy Tools to Kuberns

If you’re currently using tools like Jenkins, GitHub Actions, or Kubernetes clusters with manual scripts, you’re probably dealing with:

- Complex YAML files

- Manual scaling and monitoring setups

- Siloed CI, CD, and infra processes that break under pressure

Here’s how to shift that workload to a platform like Kuberns in a few simple steps:

1. Connect Your GitHub Repository

2. Define Environments

3. Deploy and Let AI Handle the Rest

4. Iterate Faster with Built-in CI/CD + Monitoring

By switching to an AI-powered deployment platform, you future-proof your workflow and free your team to focus on product, not process.

Those who adopt now will define the new DevOps standards. Those who delay may find themselves caught in costly, legacy-driven bottlenecks.

Looking to reduce downtime, ship faster, and optimise cloud spend? Explore how Kuberns helps you deploy smarter and faster, with the confidence of AI-driven automation.